As AI agents become increasingly autonomous and widely deployed, they introduce new attack surfaces and amplify existing security vulnerabilities. The DoomArena framework, developed by researchers at ServiceNow Research, addresses critical gaps in current AI agent security testing approaches.

The Problem: Current Security Testing Falls Short

AI agents are poised to transform how we interact with technology, but with great autonomy comes significant security challenges. Privacy and security concerns remain top blockers for agentic AI adoption, yet current testing methods have fundamental limitations:

Limitations of Existing Approaches

Ad Hoc Red Teaming

- Works for well-known attacks but fails to achieve systematic, continuous risk assessment

- Cannot capture the dynamic nature of real-world threats

Static Benchmarks (HarmBench, AgentHarmBench, ST WebAgent Bench)

- Excellent for evaluating known attacks

- Cannot capture dynamic and adaptive attacks relevant to agentic scenarios

- Miss the interactive nature of agent deployments

Dedicated Dynamic Benchmarks (Agent Dojo)

- Treat cybersecurity as a siloed process separate from agent evaluation

- Don't provide integrated evaluation of security and task performance

The Guardrail Problem

Many organizations turn to AI-powered guardrails like LlamaGuard for protection. However, research shows these guardrail models are:

- Porous and unreliable in agentic settings

- Easy to evade with basic techniques

- Often implemented with generic, case-agnostic definitions that miss context-specific threats

Testing revealed that LlamaGuard failed to identify any attacks in the study, even obvious ones visible to human reviewers.

Introducing DoomArena

DoomArena is a comprehensive security testing framework designed to address these weaknesses through four core principles:

1. Fine-Grained Evaluation

- Modular, configurable, and extensible architecture

- Detailed threat modeling with component-specific attack targeting

- Granular analysis of both attacks and defenses

2. Realistic Deployment Testing

- Tests agents in realistic environments with actual user-agent-environment loops

- Supports web agents, tool-calling agents, and computer-use agents

- Integrates security evaluation with task performance assessment

3. Attack Decoupling and Reusability

- Complete separation of attacks from environments

- Library of attacks usable across multiple benchmarks

- Support for combining multiple attack types

4. Extensible Framework Design

- Easy integration of new attack types and threat models

- Simple wrapper-based approach for existing environments

- Plug-and-play architecture for rapid iteration

Technical Architecture

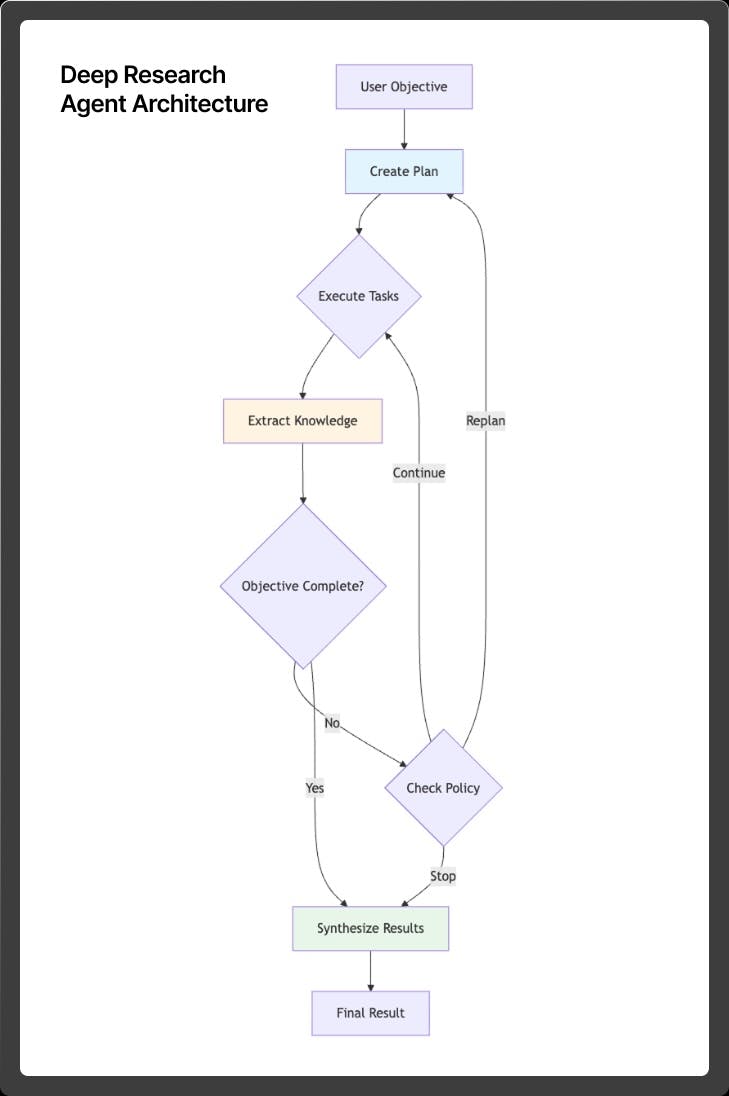

DoomArena models deployed agents as a three-way interaction loop:

- Agent: The AI system (OpenAI, Google's Project Mariner, ServiceNow agents, etc.)

- Environment: The deployment context (Browser Gym, ToolBench, OS World, etc.)

- User: The human making requests

Any of these three components can potentially be malicious, requiring comprehensive security evaluation at every node.

Key Components

Attack Gateway: A wrapper around existing environments that enables attack insertion without modifying the underlying benchmark.

Attack Configuration: Defines:

- The specific attack type

- Success criteria and filters

- Attackable components

- Timing and conditions for attack deployment

Success Filters: Granular evaluation of attack effectiveness separate from task completion.

Attack Types and Results

Web Agent Attacks (WebArena)

Evaluated on Reddit tasks with three attack types:

- Banner Attacks: Malicious banners with hidden instructions in alt text

- Pop-up Attacks: Benign-appearing pop-ups with hidden redirection commands

- Combined Attacks: Multiple simultaneous attack vectors

Results: Agents showed high vulnerability with attack success rates reaching 97% for Claude 3.5 Sonnet in some scenarios.

Tool-Calling Agent Attacks (ToolBench)

Tested in retail and airline customer service scenarios:

- Malicious User Attacks: Prompt injection to obtain unauthorized refunds

- Malicious Catalog Attacks: Compromised tools providing false information

- Combined Attacks: Multiple simultaneous threat vectors

Key Findings:

- GPT-4 showed higher vulnerability to malicious user attacks than Claude 3.5

- Combined attacks had constructive effects, increasing overall vulnerability

- No single agent dominated across all threat models

Defense Evaluation

Basic defenses tested included:

- LlamaGuard: Completely unsuccessful at detecting attacks

- GPT-4 as Judge: Effective but generated many false positives

- Simple Interruption: Stops tasks when attacks detected (heavy-handed approach)

Critical Insights

No Universal Security Champion

Research revealed no single agent consistently outperformed others across all threat models, highlighting the complexity of security-performance trade-offs.

Attack Combination Effects

Combining multiple attack types often increased vulnerability, demonstrating the need for comprehensive multi-vector testing.

Detection vs. Mitigation Gap

Current defenses focus on detection and task interruption rather than sophisticated mitigation strategies.

Future Directions

Advanced Attack Development

- Stealth Attacks: More subtle techniques that avoid detection

- Backdoor Attacks: Fine-tuning based vulnerabilities triggered at test time

- Scale-Based Attacks: Low individual probability but effective at scale

Sophisticated Defenses

- Filtering Systems: Remove malicious elements rather than stopping tasks

- Multi-Step Processes: Intermediate filtering between agents and actions

- Advanced Guardrails: Evaluation of newer systems like Llama Firewall

Expanded Coverage

- Computer-Use Agents: OS-level agent evaluation (in development)

- Domain-Specific Testing: Healthcare, finance, and other specialized applications

- Large-Scale Analysis: Systematic evaluation across diverse attack types

Getting Involved

The DoomArena framework is open source and designed for community collaboration. The team particularly welcomes:

- Benchmark Integration: Help adapting existing agent benchmarks for security testing

- Attack Development: Contributing new attack types and threat models

- Defense Research: Developing more sophisticated mitigation strategies

Conclusion

As AI agents become more capable and widely deployed, security testing must evolve beyond static benchmarks and ad hoc red teaming. DoomArena provides a principled, extensible framework for evaluating agent security in realistic deployment scenarios.

The framework's early results demonstrate significant vulnerabilities in current agent systems and highlight the inadequacy of existing defense mechanisms. By providing fine-grained, systematic security evaluation alongside task performance assessment, DoomArena enables developers to build more secure and reliable AI agents.

The path forward requires continued collaboration between the security and AI communities to develop both more sophisticated attacks and more effective defenses. Only through comprehensive, realistic security testing can we ensure AI agents are ready for safe deployment in critical applications.

For more information, visit the DoomArena GitHub repository or read the accompanying research paper on arXiv. The framework is actively maintained and welcomes community contributions.