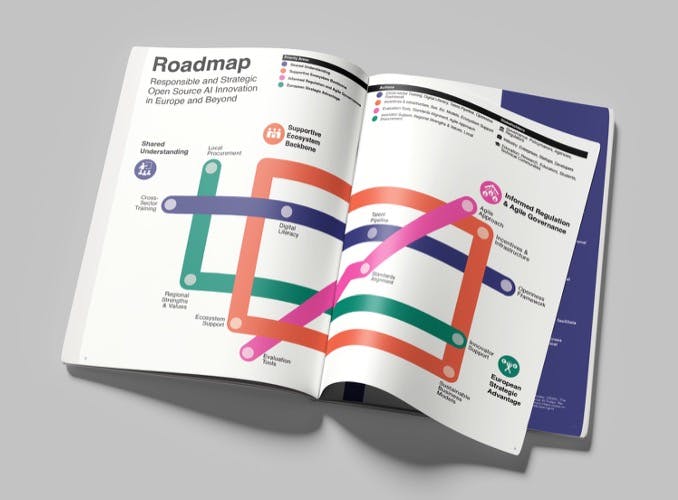

Harnessing Open Source AI for Europe’s Digital Future

Europe is at a defining crossroads in shaping the future of artificial intelligence. Harnessing open-source AI for Europe’s digital future means fostering innovation that is not only powerful but also ethical, transparent, and inclusive. The recent Roundtable on Open Source AI, co-hosted by the AI Alliance and ETH Zurich’s AI Ethics and Policy Network, brought together experts from government, academia, and industry to explore how open, trusted, and socially beneficial AI can drive growth while upholding Europe’s democratic values. As the EU AI Act and related frameworks evolve, discussions centered on fostering responsible innovation, digital sovereignty, and interoperability across borders. The resulting recommendations emphasize four needs: a shared understanding of open-source AI, stronger ecosystem infrastructure, agile governance, and better use of Europe’s strategic strengths. Together, these efforts aim to position Europe as a global leader in responsible and open AI development, ensuring that technological progress serves people, communities, and society at large.