On June 5, the AI Alliance and ETH Zurich’s AI Ethics and Policy Network co-hosted a Roundtable on Open Source AI, bringing together over 30 participants representing 17 AI Alliance members, alongside leading experts from governments and international organizations. The roundtable sought to advance dialogue on the importance of open, socially beneficial, and trusted AI as a driver of growth and innovation in Europe. A central focus was how policy frameworks can enable open-source AI while balancing safeguards and regulation with innovation.

This conversation was both timely and essential: open-source AI technologies are already transforming industries and accelerating innovation across Europe and beyond. As AI adoption intensifies, policymakers, researchers, and institutional leaders must collaborate to address the complex policy, ethical, and governance challenges of this rapidly evolving landscape. Europe now stands at a pivotal moment—striving to combine ambitious innovation and digital sovereignty with its enduring commitments to ethics, transparency, and democratic values.

Held under the Chatham House Rule, the roundtable examined the strategic importance of open-source AI within Europe’s evolving policy environment, including the implementation of the EU AI Act, as well as broader global dynamics shaping digital sovereignty and interoperability. Discussions explored how open-source AI can foster innovation, enhance competitiveness, and strengthen Europe’s capacity for ethical, transparent, and sustainable AI development.

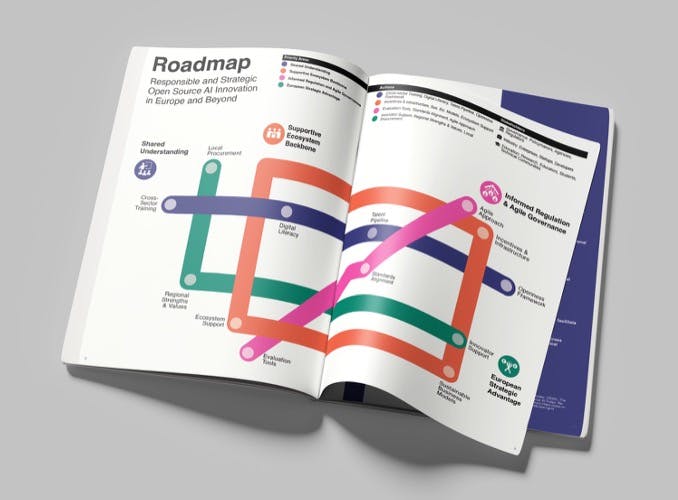

The event identified four key priorities for advancing responsible and strategic open-source AI innovation:

(1) establishing a shared understanding of open-source AI

(2) building a supportive ecosystem backbone

(3) driving informed regulation and agile governance

(4) leveraging Europe’s strategic strengths

These recommendations offer actionable insights for policymakers, researchers, and industry leaders committed to advancing Europe’s leadership in trustworthy AI. The full AI Alliance report on Responsible and Strategic Open Source AI Innovation in Europe and Beyond expands on these themes and provides guidance for stakeholders globally.

To build on these recommendations and strengthen the partnerships established during this event, we invite developers, researchers, policymakers, and thought leaders to explore the report’s findings and to join our AI Alliance Working Group on Open Source AI Governance and Impact in Europe, which meets every three weeks from 4–5 pm CEST (10–11 am EDT).

The Working Group will continue the dialogue on pressing themes shaping the future of open and responsible AI. Topics include the need for greater transparency around data provenance, copyright, and remuneration in open-source models; the development of agentic AI systems that are trustworthy by design and uphold safety and accountability; and the implications of generative AI for the future of work and education. Together, these ongoing discussions highlight the importance of aligning technological innovation with societal trust, inclusion, and long-term public benefit.

Join us in building a future of responsible AI that is open, ethical, and grounded in strong governance, so that innovation truly serves people and society.