GEO-Bench-2: From Performance to Capability, Rethinking Evaluation in Geospatial AI

By IBM, ServiceNow, and the AI Alliance Climate & Sustainability Working Group

A New Era for Geospatial AI

Geospatial Foundation Models (GeoFMs) are large-scale AI models trained on diverse Earth observation data to support multiple geospatial tasks. They are transforming how we understand and manage our planet. But as these models grow in complexity and capability, one question becomes critical:

How do we measure progress in a consistent, meaningful way?

Current evaluation efforts face limitations in scope, licensing, reproducibility, and usability, which hinder adoption and make models hard to compare. Today, ahead of the full release, we unveil the first results from GEO-Bench-2, a benchmark designed to evaluate GeoFMs across diverse tasks and datasets. With GEO-Bench-2, we aim to provide a shared framework that accelerates innovation and collaboration in geospatial AI.

What Makes GEO-Bench-2 Unique?

GEO-Bench-2 is more than a dataset collection. It’s a comprehensive evaluation ecosystem built to answer open research questions and guide the next generation of GeoFMs.

1. Diverse Dataset Aggregation

We’ve aggregated 19 datasets, organized into 9 subsets, each targeting specific capabilities aligned with critical research challenges. Every dataset has been carefully reviewed for a permissive license and high data quality. Where necessary, datasets have also been sub-sampled to reduce the computational resources needed for evaluation.

Table 1 – GEO-Bench-2 datasets and capabilities.

2. Flexible Evaluation Protocol

Our protocol ensures fair, comparable, and meaningful evaluations, regardless of model architecture or pre-training strategy. It includes two components. Hyperparameter optimization (HPO) lets each model adapt to the specific task, and repeated experiments capture the variability across runs. This flexibility fosters innovation while maintaining scientific rigor. To ensure an apples-to-apples comparison when aggregating across datasets, the protocol also recommends dataset-wise min-max normalization and the bootstrapped interquartile mean (IQM). The IQM reduces the influence of outlier runs, and bootstrapping quantifies the uncertainty of the aggregate.

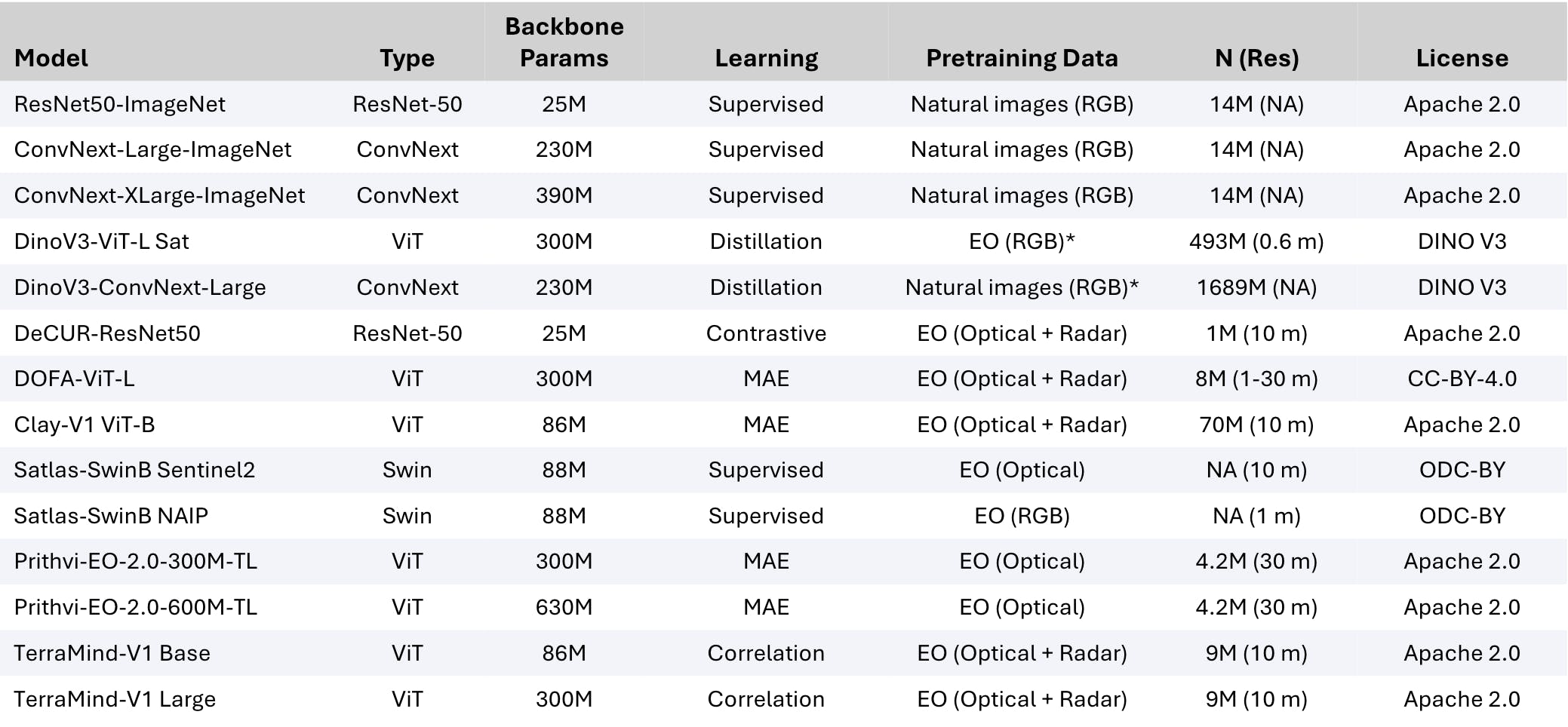
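The aggregation step described above can be sketched in a few lines of Python. This is a minimal illustration, not the GEO-Bench-2 implementation. It makes two assumptions. First, the min-max bounds for each dataset are taken to be the lowest and highest scores observed across all models and runs on that dataset. Second, the confidence interval comes from a stratified percentile bootstrap, in which runs are resampled within each dataset. All dataset names, model names, and scores are made up.

```python
import random
from statistics import mean


def iqm(values):
    """Interquartile mean: drop the lowest and highest 25% of scores, average the rest."""
    v = sorted(values)
    k = len(v) // 4  # number of scores trimmed from each end
    return mean(v[k:len(v) - k])


def normalize_per_dataset(results):
    """Min-max normalize raw scores separately for each dataset.

    results[dataset][model] is a list of raw scores from repeated runs.
    Assumption (not from GEO-Bench-2): the bounds are the min and max over
    all models and runs on that dataset, giving a common [0, 1] scale.
    """
    normed = {}
    for ds, by_model in results.items():
        all_scores = [s for runs in by_model.values() for s in runs]
        lo, hi = min(all_scores), max(all_scores)
        span = (hi - lo) or 1.0  # guard against a dataset where all scores tie
        normed[ds] = {m: [(s - lo) / span for s in runs] for m, runs in by_model.items()}
    return normed


def stratified_bootstrap_iqm(per_dataset_runs, n_boot=1000, alpha=0.05, seed=0):
    """IQM over all datasets plus a percentile bootstrap confidence interval.

    per_dataset_runs holds one list of normalized run scores per dataset.
    Each bootstrap replicate resamples runs with replacement within each dataset.
    """
    rng = random.Random(seed)
    point = iqm([s for runs in per_dataset_runs for s in runs])
    stats = sorted(
        iqm([rng.choice(runs) for runs in per_dataset_runs for _ in runs])
        for _ in range(n_boot)
    )
    k = int(alpha / 2 * n_boot)  # index of the lower percentile
    return point, (stats[k], stats[n_boot - 1 - k])


# Hypothetical scores for two models on two datasets (three seeds each).
results = {
    "dataset_a": {"model_x": [0.61, 0.63, 0.62], "model_y": [0.55, 0.57, 0.40]},
    "dataset_b": {"model_x": [0.90, 0.91, 0.89], "model_y": [0.93, 0.94, 0.92]},
}
normed = normalize_per_dataset(results)
for model in ("model_x", "model_y"):
    point, (low, high) = stratified_bootstrap_iqm([normed[ds][model] for ds in normed])
    print(f"{model}: IQM={point:.3f}, 95% CI=[{low:.3f}, {high:.3f}]")
```

Normalizing first stops a dataset with a wide metric range from dominating the aggregate. Because a quarter of the pooled scores is trimmed from each end, the collapsed 0.40 run of `model_y` is excluded from its IQM instead of dragging down a plain mean.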

3. Extensive Baseline Experiments

Following the GEO-Bench-2 protocol, we ran more than 15,000 experiments with TerraTorch across popular open GeoFMs and compared them with the latest open computer vision models (see Table 2) to uncover:

- Which models excel at which capabilities?

- Where do they fall short?

- What challenges lie ahead?

Table 2 – Models considered in the GEO-Bench-2 experiments. Optical indicates the use of EO data beyond RGB; * denotes the use of proprietary data. Backbone parameter counts are approximate. N: number of training samples, in millions of images; Res: spatial resolution, in meters.

Table 3 – Model rankings across all GEO-Bench-2 capabilities.

Table 3 shows results obtained with full fine-tuning. Clay V1 takes the lead in the Core capability, followed by ConvNext-based models. Clay V1 uses a smaller patch size, which retains more information at a higher computational cost. As expected, general computer vision models and models pretrained on RGB-only data (i.e., DINO V3 and ConvNext) top the <10m resolution and RGB/NIR capabilities. In contrast, EO-specific models such as TerraMind and Prithvi-EO-2.0, which were pretrained on this type of data, lead in multispectral-dependent tasks. These tasks are critical for applications like agriculture, environmental monitoring, and disaster response, where RGB data alone is often insufficient.
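The post does not say how the per-capability rankings in Table 3 are computed. The following hypothetical sketch shows one simple approach: average each model's normalized per-dataset score over the datasets assigned to a capability, then sort models by that average. The capability names, dataset names, and scores are illustrative only. A plain mean stands in for whatever aggregate the benchmark actually uses, such as the bootstrapped IQM. Letting a dataset count toward more than one capability is also an assumption.

```python
from statistics import mean


def capability_ranking(scores, capabilities):
    """Rank models within each capability (hypothetical scheme, not GEO-Bench-2's).

    scores[model][dataset]: normalized score for that model on that dataset.
    capabilities[name]: datasets assigned to that capability; in this sketch a
    dataset may appear under several capabilities.
    Returns {capability: [(model, score), ...]} sorted best-first.
    """
    table = {}
    for cap, datasets in capabilities.items():
        # Assumption: a plain mean over the capability's datasets.
        cap_scores = {m: mean(per_ds[d] for d in datasets) for m, per_ds in scores.items()}
        table[cap] = sorted(cap_scores.items(), key=lambda kv: kv[1], reverse=True)
    return table


# Illustrative capability groups and normalized scores.
capabilities = {
    "core": ["ds1", "ds2", "ds3"],
    "multispectral": ["ds2", "ds3"],
}
scores = {
    "model_x": {"ds1": 1.0, "ds2": 0.5, "ds3": 0.6},
    "model_y": {"ds1": 0.4, "ds2": 0.8, "ds3": 0.7},
}
for cap, ranking in capability_ranking(scores, capabilities).items():
    print(cap, [m for m, _ in ranking])
# core ['model_x', 'model_y']
# multispectral ['model_y', 'model_x']
```

This mirrors the pattern described above, where no single model wins every capability. A model that is strong on one dataset can lead a broad capability while trailing on a narrower, multispectral-focused one.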

4. A Public Leaderboard

Progress thrives on transparency. Our leaderboard tracks model performance on GEO-Bench-2, enabling the community to benchmark, compare, and improve. It lets users drill down into specific capabilities and switch between full fine-tuning and frozen-encoder results.

Figure 1 – GEO-Bench-2 leaderboard outline.

5. Integration with Ready-to-Use Tools

GEO-Bench-2 comes as its own Python package, available on GitHub. All datasets are stored in the TACO format and will soon be available on the AI Alliance Hugging Face page. We have also built a strong integration with TerraTorch, the most popular tool for fine-tuning and experimenting with GeoFMs. With it, you can launch the full benchmark over the GEO-Bench-2 datasets, including HPO and repeated experiments, from a single config. TerraTorch makes experimentation easier, but it is not required to submit scores to the leaderboard, and users are free to use their favorite tools.

Why It Matters

By providing a shared yardstick, GEO-Bench-2 empowers researchers and industry alike:

- Researchers gain clarity on what works and what doesn’t.

- Companies can benchmark solutions before deployment.

- Society benefits from better tools for climate resilience, disaster response, and sustainability.

Join the Movement

GEO-Bench-2 is an open initiative led by IBM and ServiceNow in collaboration with MBZUAI, NASA, ESA Φ-lab, Technical University Munich, Arizona State University, and Clark University, under the AI Alliance Climate & Sustainability Working Group. The full release of the datasets and leaderboard on Hugging Face is coming soon, alongside a comprehensive technical report. The report will explain how we obtained our results and present several ablation studies. It will also analyze how model capabilities relate to parameter count and pretraining dataset size. Stay tuned and help us shape the future of geospatial AI!

“By providing a shared yardstick, GEO-Bench-2 helps researchers, companies, and society navigate toward a smarter, more sustainable planet.”