Vinh Luong, R&D & Open-Source Lead, Aitomatic

Annie Ha, Head of Communications, Aitomatic

In our rapidly evolving, information-driven world, professionals across diverse fields need instant, precise, and tailored answers to complex questions. From doctors seeking the latest treatment options to financial analysts needing insights into market trends, the demand for accurate, domain-specific information is higher than ever. However, current AI systems frequently fall short in providing the depth and accuracy demanded for expert-level decision-making, leading to significant inefficiencies and missed opportunities.

To address this challenge, the members of the AI Alliance tools working group have conducted a comprehensive study on best practices for advancing domain-specific Q&A using retrieval-augmented generation (RAG) techniques. The findings of this research, published at https://arxiv.org/abs/2404.11792, provide valuable insights and recommendations for AI researchers and practitioners looking to maximize the capabilities of Q&A AI in specialized domains.

"The AI Alliance is creating an ecosystem for open innovation and collaboration in AI, unlocking its potential to benefit society" – Anthony Annunziata, Head of AI Open Innovation and the AI Alliance at IBM

Game-Changing Techniques to Enhance Q&A Systems

The study investigates various techniques, including domain-specific fine-tuning and iterative reasoning, to significantly enhance the performance of RAG-based Q&A systems.

- Fine-Tuning: This technique involves customizing the embedding model used in RAG’s retrieval step, the generative model used in RAG’s generation step, or both, so that the models capture the terminology and nuances of a specific domain. Fine-tuning embedding models enables them to retrieve more relevant information by understanding domain-specific terminology and context. Likewise, fine-tuning generative models enhances their ability to produce accurate and contextually appropriate answers.

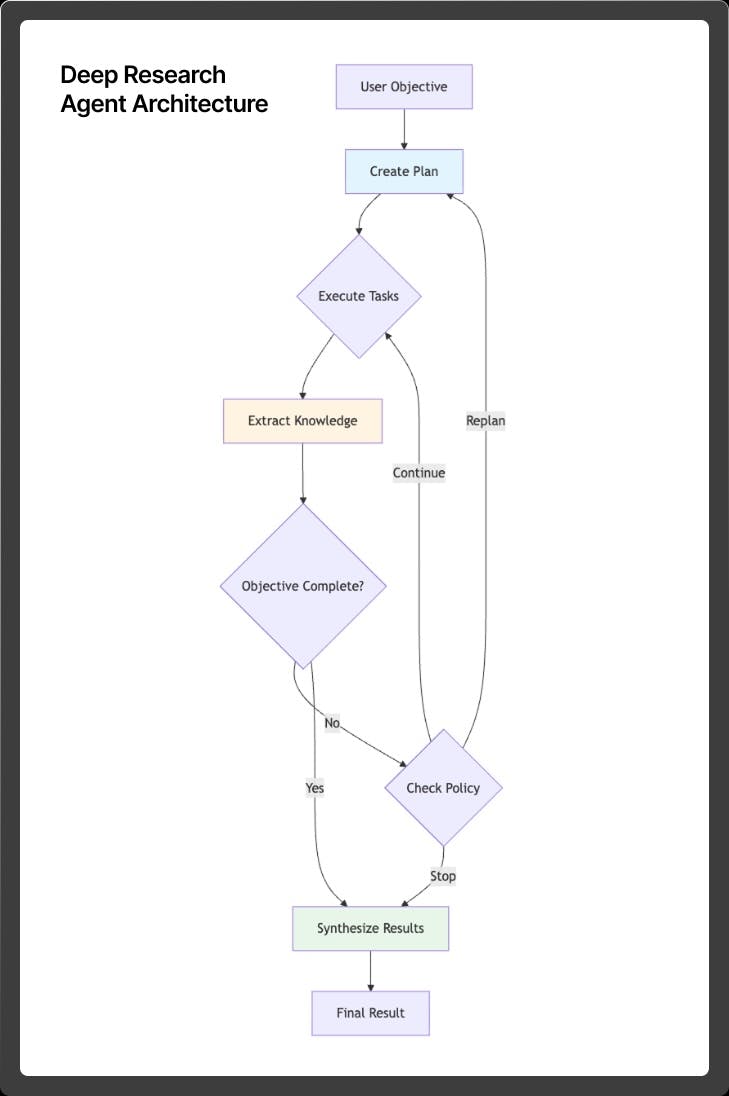

- Iterative Reasoning: This technique leverages the Observe-Orient-Decide-Act (OODA) paradigm to refine the AI's answers through multiple steps, improving accuracy and relevance with each pass. The loop involves: (i) Observing relevant information from available resources; (ii) Orienting, that is, assessing whether the information collected is sufficient to solve the problem at hand; (iii) Deciding whether to solve the problem directly in one go or to decompose it further into more manageable sub-problems; and (iv) Acting to execute that decision and updating the status of the problem-solving process. This iterative process enables the AI to consider multiple perspectives, gather additional information, and adjust its answers, much like human problem-solving.
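The four OODA steps described above can be sketched in a few lines of Python. This is a minimal illustration, not the study's implementation: the `Task` class, the `ooda_solve` function, the `max_depth` cutoff, and the four pluggable callables (`retrieve`, `is_sufficient`, `decompose`, `generate`) are all hypothetical names introduced here, and the toy corpus holds made-up figures.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Task:
    """Hypothetical container for one (sub-)problem and its solving state."""
    question: str
    evidence: List[str] = field(default_factory=list)
    answer: Optional[str] = None


def ooda_solve(
    task: Task,
    retrieve: Callable[[str], List[str]],
    is_sufficient: Callable[[str, List[str]], bool],
    decompose: Callable[[str], List[str]],
    generate: Callable[[str, List[str]], str],
    max_depth: int = 2,  # assumed safety cutoff so the recursion always ends
) -> Task:
    # Observe: gather information relevant to the question.
    task.evidence.extend(retrieve(task.question))
    # Orient: judge whether the gathered information is enough.
    sufficient = is_sufficient(task.question, task.evidence)
    # Decide: answer in one go, or break the problem down further.
    solve_directly = sufficient or max_depth == 0
    # Act: carry out the decision and update the task's status.
    if not solve_directly:
        for sub_question in decompose(task.question):
            sub_task = ooda_solve(
                Task(sub_question), retrieve, is_sufficient,
                decompose, generate, max_depth - 1,
            )
            task.evidence.append(f"{sub_question} -> {sub_task.answer}")
    task.answer = generate(task.question, task.evidence)
    return task


# Toy stand-ins for a retriever and an LLM (made-up data, illustration only).
CORPUS = {
    "revenue 2021": "Revenue in 2021 was 100.",
    "revenue 2022": "Revenue in 2022 was 120.",
}


def toy_retrieve(question: str) -> List[str]:
    return [text for key, text in CORPUS.items() if key in question.lower()]


def toy_is_sufficient(question: str, evidence: List[str]) -> bool:
    return bool(evidence)


def toy_decompose(question: str) -> List[str]:
    return ["What was revenue 2021?", "What was revenue 2022?"]


def toy_generate(question: str, evidence: List[str]) -> str:
    return " ".join(evidence) if evidence else "unknown"
```

In a real system the four callables would wrap a vector-store lookup and LLM calls. The point of the sketch is only the control flow: a question the retriever cannot answer directly is split into sub-questions whose answers are folded back in as evidence.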

"Our research provides best practices for advancing domain-specific Q&A using retrieval-augmented generation, accelerating AI systems that understand specialized knowledge." – Zooey Nguyen, AI engineer & author from Aitomatic

Putting the Techniques to the Test with FinanceBench Dataset

To assess the effectiveness of fine-tuning and iterative reasoning, the researchers conducted experiments using the FinanceBench dataset. The open-source release of FinanceBench is a subset of a larger collection of 10,000 financial-analysis questions about publicly traded companies, based on public company filings with the U.S. Securities and Exchange Commission (SEC).

The experiments compared various Q&A system configurations, including generic retrieval-augmented generation (RAG), fine-tuned RAG, and RAG with OODA reasoning. The performance of each system was evaluated using several automated and human-evaluated metrics, including retrieval quality and answer correctness.
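The article does not spell out the exact metrics, so the following is only a sketch of how retrieval quality and answer correctness are commonly scored. It assumes hit rate at k for retrieval and plain accuracy over per-question correctness grades. The function names and the data layout are hypothetical.

```python
from typing import Sequence, Set


def hit_rate_at_k(
    retrieved: Sequence[Sequence[str]],
    relevant: Sequence[Set[str]],
    k: int,
) -> float:
    """Fraction of questions with at least one relevant chunk in the top k."""
    if not retrieved or len(retrieved) != len(relevant):
        raise ValueError("need one non-empty, equally long entry per question")
    hits = sum(
        1 for docs, gold in zip(retrieved, relevant)
        if gold.intersection(docs[:k])
    )
    return hits / len(retrieved)


def answer_accuracy(grades: Sequence[bool]) -> float:
    """Fraction of answers graded correct (by a human or an automated judge)."""
    if not grades:
        raise ValueError("grades must not be empty")
    return sum(grades) / len(grades)
```

Running both functions once per configuration (generic RAG, fine-tuned RAG, RAG with OODA reasoning) on the same question set gives scores that can be compared directly.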

Key Findings: Fine-Tuning and Iterative Reasoning Deliver Impressive Results

The results showed that fine-tuning significantly improved retrieval accuracy and answer quality. Notably, fine-tuning the embedding model used in RAG’s retrieval step yielded larger accuracy gains than fine-tuning the generative model.

Additionally, integrating iterative reasoning via the OODA loop yielded the largest performance improvements. The configuration that paired generic RAG with OODA reasoning outperformed even the fully fine-tuned RAG, highlighting the critical role of iterative reasoning in enhancing Q&A systems.

Understanding and Applying What We Learned

The AI Alliance aims to empower the AI community by providing a structured analysis of these techniques and their contributions to Q&A performance, offering clear best practices for developing domain-specific Q&A systems.

- Prioritize Fine-Tuning of Embedding Models: This technique offers superior performance and resource efficiency compared to fine-tuning generative models.

- Employ Iterative Reasoning Mechanisms: Use OODA reasoning or other iterative methods to significantly enhance the Q&A system's ability to combine information from multiple sources and improve informational consistency.

- Map Out a Structured Technical Design Space: Identify the components with the most significant impact on Q&A system performance. Create a structured design space to capture possible configurations and make informed decisions based on quantitative results.
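One way to make such a design space concrete is to enumerate it in code. The sketch below assumes three axes drawn from the techniques discussed in this article (embedding model, generative model, reasoning mode). The names `RagConfig`, `DESIGN_SPACE`, `enumerate_configs`, and `rank_configs` are hypothetical, and the `evaluate` callable stands in for whatever benchmark run produces a score.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class RagConfig:
    """One point in the (assumed) design space."""
    embedding: str
    generator: str
    reasoning: str


# Assumed axes; a real study may include more components or options.
DESIGN_SPACE: Dict[str, List[str]] = {
    "embedding": ["generic", "fine-tuned"],
    "generator": ["generic", "fine-tuned"],
    "reasoning": ["none", "ooda"],
}


def enumerate_configs(space: Dict[str, List[str]] = DESIGN_SPACE) -> List[RagConfig]:
    """Return every combination of options as a RagConfig."""
    keys = list(space)
    return [
        RagConfig(**dict(zip(keys, values)))
        for values in product(*(space[key] for key in keys))
    ]


def rank_configs(
    configs: List[RagConfig],
    evaluate: Callable[[RagConfig], float],
) -> List[Tuple[RagConfig, float]]:
    """Score each configuration with `evaluate` and sort best-first."""
    scored = [(config, evaluate(config)) for config in configs]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

With two options on each of three axes this yields eight configurations. Scoring all of them with the same `evaluate` function keeps the comparison quantitative and shows which component contributes most.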

The Power of Open Innovation and Collaboration: A Future of Precise Answers and Progress

"By promoting open-source tools and collaborating on their development, we're empowering the AI community to create powerful, adaptable, and responsible AI systems." – Adam Pingel, IBM Head of Open Tools and Applications, The AI Alliance

The Alliance’s work on domain-specific Q&A best practices showcases the immense potential of open innovation and collaboration in advancing AI technologies. By bringing together diverse talents and fostering knowledge sharing, the Alliance is accelerating progress and empowering the AI community to develop cutting-edge solutions.

As the AI Alliance continues its mission, the future of domain-specific Q&A systems is bright. By embracing the AI Alliance's best practices and nurturing a culture of open collaboration, the AI community can unlock the full potential of these specialized AI tools, transforming them into indispensable assets across industries.